FEC on NVIDIA L4

5G NR PHY FEC Acceleration on NVIDIA L4 — High Performance, Low Power

Build carrier-grade 5G NR on COTS hardware with an Arm server and an NVIDIA L4 GPU. Our CUDA C-based FEC pipeline delivers deterministic latency, high throughput, and outstanding energy efficiency, making it ideal for edge and dense deployments.

Why NVIDIA L4 for 5G PHY

- 72W TDP with passive cooling: optimized for DU power/thermal envelopes.

- Low-profile PCIe Gen4 card: fits compact edge servers with modest airflow requirements.

- AI-ready: co-locate RAN PHY and near-RT AI on a single GPU to future-proof the platform.

Proven Results (Lab Validated)

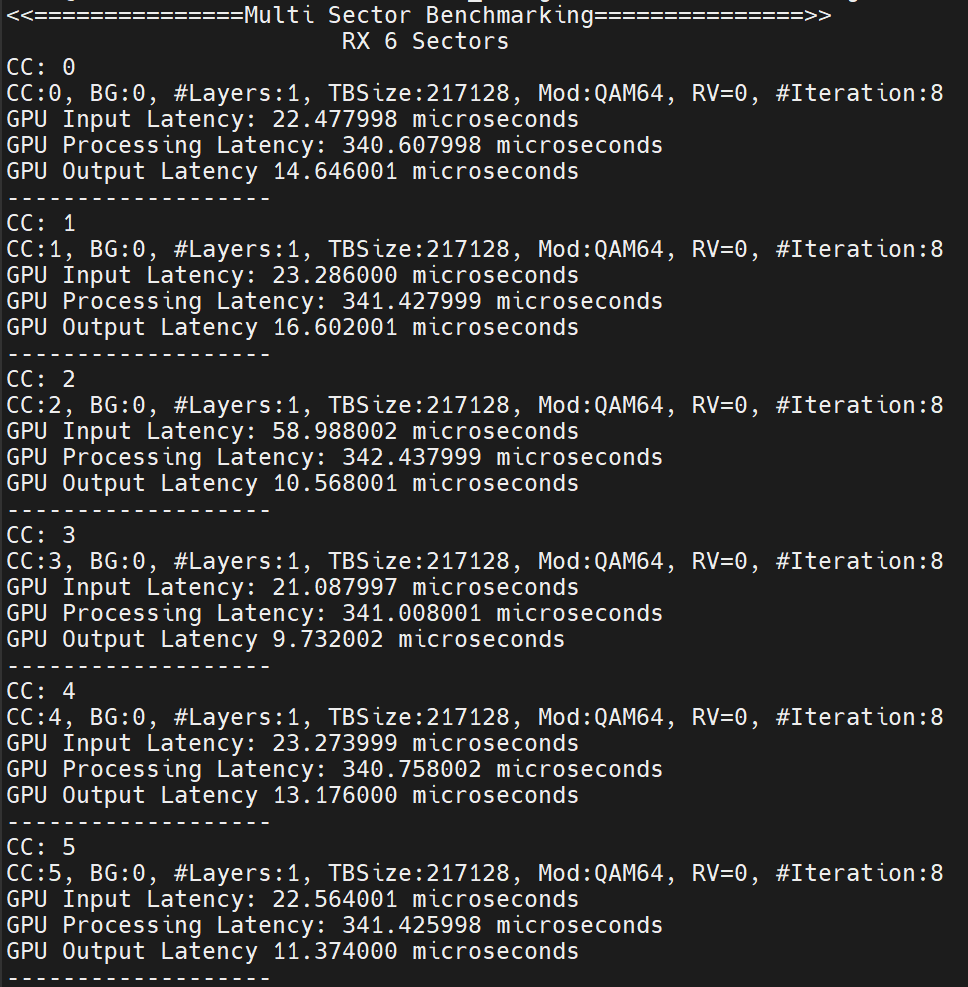

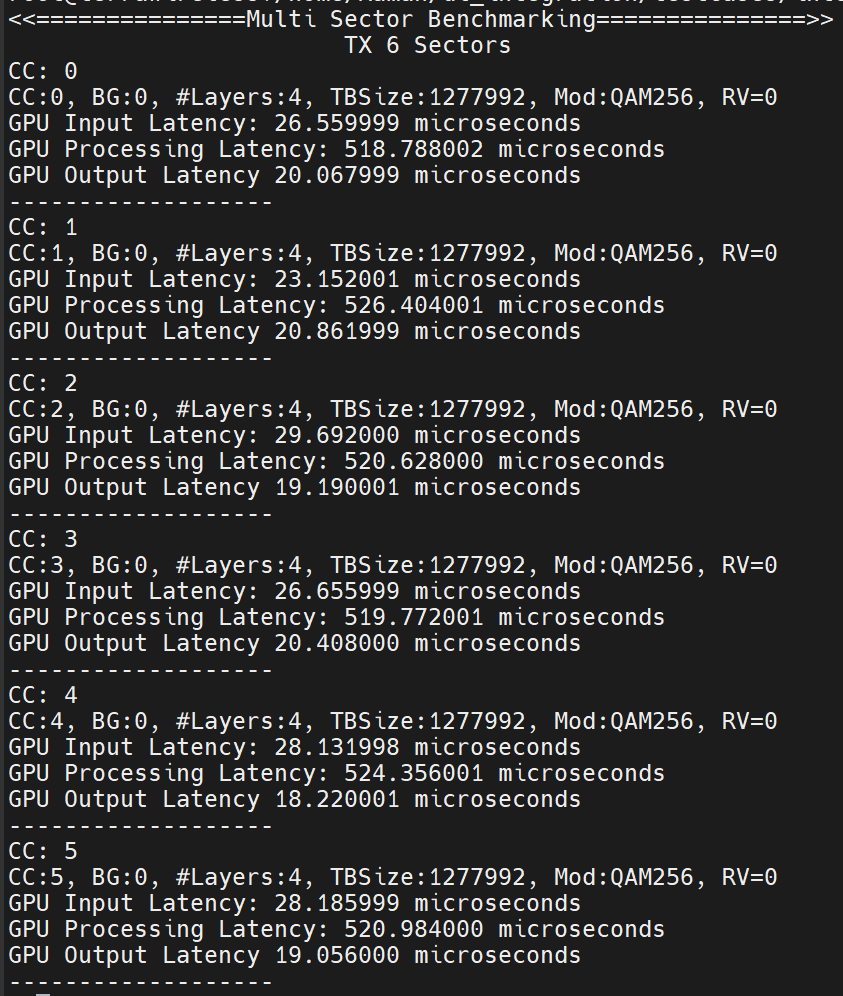

- Configuration (peak-throughput test): 6 sectors (6 CCs), 100MHz bandwidth per CC, 4T4R, μ=1 (30 kHz SCS), TDD pattern DDDSU.

- Downlink throughput: 2.555Gbps peak per sector. Uplink throughput: 100Mbps per sector (full throughput).

- PCIe transfers: ~20µs per CC both ways (config + data), overlapped via multiple CUDA streams.

- TX (bit processing): ~500µs per CC (CB segmentation, CB CRC, LDPC encode, rate match with filler handling, CB concat).

- RX (bit processing): ~350µs per CC (CB segmentation, rate recovery with filler handling, LDPC decode, CB CRC).
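Both chains start with code block segmentation, which also fixes how many filler bits each code block carries. The plain C sketch below follows the LDPC base graph selection (TS 38.212 §7.2.2) and code block segmentation (§5.2.2) rules as we read them. It is host-side illustration only: the names `select_bg`, `cb_segment`, and `cb_seg_t` are hypothetical, and TB size determination is out of scope.

```c
#include <assert.h>

/* Segmentation result; field names mirror the TS 38.212 symbols. */
typedef struct {
    int C;      /* number of code blocks */
    int L;      /* CRC bits per CB: 0 (single CB) or 24 (CRC24B) */
    int Kprime; /* K': bits per CB including CB CRC, before filler bits */
    int Zc;     /* selected LDPC lifting size */
    int K;      /* bits per CB after filler insertion */
    int F;      /* filler bits per CB, F = K - K' */
} cb_seg_t;

/* LDPC base graph selection. A: TB size before TB CRC, R: target code rate. */
int select_bg(int A, double R) {
    if (A <= 292 || (A <= 3824 && R <= 0.67) || R <= 0.25) return 2;
    return 1;
}

/* Smallest lifting size Z = a * 2^j (a in {2,3,5,...,15}, Z <= 384) with Z >= min_z. */
static int min_lifting_size(int min_z) {
    static const int a_vals[8] = {2, 3, 5, 7, 9, 11, 13, 15};
    int best = 0;
    for (int i = 0; i < 8; ++i)
        for (int z = a_vals[i]; z <= 384; z *= 2)
            if (z >= min_z && (best == 0 || z < best)) best = z;
    return best; /* 0 if no lifting size is large enough */
}

/* Code block segmentation. B: TB size including the TB CRC; bg: 1 or 2.
 * Assumes B' is divisible by C, which holds for valid NR TB sizes. */
cb_seg_t cb_segment(int B, int bg) {
    assert(B > 0 && (bg == 1 || bg == 2));
    cb_seg_t s;
    const int Kcb = (bg == 1) ? 8448 : 3840; /* maximum CB size */
    int Bprime;
    if (B <= Kcb) {
        s.L = 0; s.C = 1; Bprime = B;
    } else {
        s.L = 24;
        s.C = (B + (Kcb - s.L) - 1) / (Kcb - s.L); /* ceil(B / (Kcb - L)) */
        Bprime = B + s.C * s.L;
    }
    s.Kprime = Bprime / s.C;

    int Kb; /* K_b from TS 38.212 §5.2.2 */
    if (bg == 1)      Kb = 22;
    else if (B > 640) Kb = 10;
    else if (B > 560) Kb = 9;
    else if (B > 192) Kb = 8;
    else              Kb = 6;

    s.Zc = min_lifting_size((s.Kprime + Kb - 1) / Kb); /* smallest Zc with Kb*Zc >= K' */
    s.K = ((bg == 1) ? 22 : 10) * s.Zc;
    s.F = s.K - s.Kprime;
    return s;
}
```

For example, a 10,000-bit transport block (TB CRC included) on BG1 splits into two code blocks with K' = 5024, Zc = 240, K = 5280, and 256 filler bits each.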

These figures are sufficient to meet μ=1 slot deadlines (0.5 ms per slot).
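The filler handling in both chains comes down to skipping filler positions when reading from (TX) or writing into (RX) the circular buffer. In the encoder output d, the fillers sit at positions K' − 2Zc through K − 2Zc − 1, because the first 2Zc systematic bits are punctured. The following is a minimal sketch of bit selection (TS 38.212 §5.4.2.1) and its receive-side inverse. It assumes no limited-buffer rate matching, so Ncb is the full buffer length. It also omits bit interleaving (§5.4.2.2) and assumes an LLR sign convention. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* k0 for redundancy version rv (TS 38.212 Table 5.4.2.1-2):
 * k0 = floor(num * Ncb / (den * Zc)) * Zc, with den = 66 (BG1) or 50 (BG2). */
int rm_k0(int bg, int rv, int Ncb, int Zc) {
    static const int num_bg1[4] = {0, 17, 33, 56};
    static const int num_bg2[4] = {0, 13, 25, 43};
    assert((bg == 1 || bg == 2) && rv >= 0 && rv <= 3 && Zc > 0);
    const int64_t den = (bg == 1) ? 66 : 50;
    const int64_t num = (bg == 1) ? num_bg1[rv] : num_bg2[rv];
    return (int)((num * Ncb) / (den * Zc)) * Zc;
}

/* Bit selection: read E bits circularly from d[0..Ncb-1] starting at k0,
 * skipping positions flagged as filler (<NULL>). */
void rate_match(const uint8_t *d, const uint8_t *is_filler, int Ncb,
                int k0, int E, uint8_t *e) {
    assert(Ncb > 0 && E >= 0);
    int k = 0, j = 0;
    while (k < E) { /* requires at least one non-filler position */
        int pos = (k0 + j) % Ncb;
        if (!is_filler[pos]) e[k++] = d[pos];
        j++;
    }
}

/* Rate recovery (inverse of rate_match): soft-combine E received LLRs into
 * the Ncb circular buffer and pin filler positions to a large positive LLR,
 * since filler bits are known zeros (assumes LLR = log P(0)/P(1)). */
void rate_recover(const float *e_llr, const uint8_t *is_filler, int Ncb,
                  int k0, int E, float filler_llr, float *llr) {
    assert(Ncb > 0 && E >= 0);
    for (int i = 0; i < Ncb; ++i) llr[i] = is_filler[i] ? filler_llr : 0.0f;
    int k = 0, j = 0;
    while (k < E) {
        int pos = (k0 + j) % Ncb;
        if (!is_filler[pos]) llr[pos] += e_llr[k++];
        j++;
    }
}
```

Pinning fillers to a large LLR lets the decoder treat them as known bits instead of erasures. Repeated transmissions of the same position simply add up.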

What’s Inside the CUDA C Kernel Pipeline

3GPP-compliant FEC:

- LDPC encode/decode (BG1/BG2), rate match/recovery including filler bits, CB segmentation/concatenation, CRC.

- LDPC decoder:

  - Early termination based on CRC results; configurable iteration count.

  - KPIs: per-code-block CRC result and iteration count.
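CRC-based early termination amounts to checking the code block CRC after each decoder iteration and stopping at the first pass. The same loop yields the per-code-block KPIs (CRC result, iterations used). The sketch below rests on several assumptions. It computes CRC-24B from TS 38.212 §5.1 bit by bit, and it assumes a transport block with more than one code block, so each CB carries a 24-bit CB CRC. The `ldpc_iter_fn` hook is a hypothetical stand-in for one decoder iteration, and `mock_iter` is a toy used only to exercise the loop; it does not decode anything.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CRC24B_POLY 0x800063u /* g(D) = D^24 + D^23 + D^6 + D^5 + D + 1 */

/* Bitwise CRC-24B over n bits (one bit per byte, first bit = highest power),
 * zero initial register and no final XOR. */
uint32_t crc24b(const uint8_t *bits, size_t n) {
    uint32_t crc = 0;
    for (size_t i = 0; i < n; ++i) {
        uint32_t fb = ((crc >> 23) ^ bits[i]) & 1u; /* feedback bit */
        crc = (crc << 1) & 0xFFFFFFu;
        if (fb) crc ^= CRC24B_POLY;
    }
    return crc;
}

/* Hypothetical hook: runs one decoder iteration and writes the K' hard
 * decisions (data bits followed by the 24 CB CRC bits) into hard[]. */
typedef void (*ldpc_iter_fn)(void *state, uint8_t *hard);

/* Per-code-block KPIs reported by the decoder. */
typedef struct {
    int crc_ok;     /* 1 if the CB CRC passed */
    int iterations; /* iterations actually executed */
} cb_kpi_t;

/* Iterate until the CB CRC passes or max_iter is reached. */
cb_kpi_t decode_with_early_termination(ldpc_iter_fn iterate, void *state,
                                       uint8_t *hard, size_t kprime,
                                       int max_iter) {
    assert(kprime > 24 && max_iter > 0);
    cb_kpi_t kpi = {0, 0};
    for (int it = 1; it <= max_iter; ++it) {
        iterate(state, hard);
        kpi.iterations = it;
        if (crc24b(hard, kprime) == 0) { /* zero remainder: CRC passes */
            kpi.crc_ok = 1;
            break;
        }
    }
    return kpi;
}

/* Toy stand-in for a real LDPC iteration, for illustration only: emits the
 * stored codeword with its first bit flipped until converge_at iterations. */
typedef struct {
    const uint8_t *codeword;
    size_t kprime;
    int converge_at;
    int calls;
} mock_decoder_t;

static void mock_iter(void *state, uint8_t *hard) {
    mock_decoder_t *m = (mock_decoder_t *)state;
    m->calls++;
    for (size_t i = 0; i < m->kprime; ++i) hard[i] = m->codeword[i];
    if (m->calls < m->converge_at) hard[0] ^= 1u;
}
```

The CRC remainder over the data plus its attached parity is zero, so the check needs no separate comparison against the received CRC bits. For single-code-block transport blocks, no CB CRC is attached, so the TB CRC would have to be checked instead.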

Deterministic scheduling:

- One CUDA stream per sector/CC.

- Pinned (page-locked) host memory for asynchronous transfers.

Operator-grade readiness:

- Designed for integration with O-RAN split 7.2a DU pipelines and PTP-timed fronthaul.

Benefits at a Glance

- Deterministic sub-ms latency: meet μ=1 timing with margin.

- Superior perf/W: carrier-grade throughput within a 72W GPU power envelope.

- Scalable and flexible: add sectors/CCs with more parallel streams or additional cards, with no hardware respins.

- Start with 6x100MHz per L4 and scale linearly if needed; future releases and kernel optimizations aim to increase density over time, in line with industry trends.

- AI-native: the same GPU runs FEC acceleration and AI workloads, improving utilization and long-term ROI.

  - Examples: run near-RT RIC functions, channel prediction, precoder selection, and scheduler optimization alongside the PHY, with resources still left on the card.
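For reference, the μ=1 timing cited in these benefits follows from the NR numerology in TS 38.211. Slots last 1 ms / 2^μ, which is 500 µs at μ=1, and the DDDSU pattern from the lab configuration therefore repeats every 2.5 ms. The small C helpers below compute these figures; their names are hypothetical.

```c
#include <assert.h>
#include <string.h>

/* Slot duration in microseconds: 1 ms / 2^mu (mu = 0..3 gives whole microseconds). */
int slot_duration_us(int mu) {
    assert(mu >= 0 && mu <= 3);
    return 1000 >> mu;
}

/* Duration of one repetition of a TDD slot pattern such as "DDDSU". */
int pattern_period_us(const char *pattern, int mu) {
    return (int)strlen(pattern) * slot_duration_us(mu);
}

/* Slots of type t ('D', 'S' or 'U') per second for a repeating pattern.
 * Assumes the pattern length divides the number of slots per second. */
int slots_per_second(const char *pattern, int mu, char t) {
    int len = (int)strlen(pattern), n = 0;
    assert(len > 0);
    for (int i = 0; i < len; ++i)
        if (pattern[i] == t) n++;
    return n * (1000 << mu) / len; /* 1000 * 2^mu slots per second in total */
}
```

At μ=1 with DDDSU, this gives 1200 downlink, 400 special, and 400 uplink slots per second for each CC.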

Deployment Options

- COTS server (Arm Ampere or x86) + NVIDIA L4 GPU (passive, low-profile, PCIe Gen4).

- Edge-optimized chassis that provides sufficient airflow for passively cooled GPUs.

Our Services

- Performance PoC: single- and multi-sector bring-up with detailed KPIs.

- Integration: O-RAN 7.2a DU fronthaul endpoint and lab interop.

- Optimization: kernel tuning, stream scheduling, and RDMA enablement.

- AI add-ons: near-RT inference for RAN optimization on shared GPU resources.

Looking to modernize 5G PHY with a low-power, low-cost, software-defined platform? Contact us for a demo, a performance brief, or a tailored PoC plan.

Get in Touch