5G NR High PHY

5G NR FR1 O-RAN 7.2x High PHY – on Arm Neoverse N1/N2 & NVIDIA L4 GPU

5G FR1 DU Layer 1 System Parameters

For Receiver Algorithm Performance Results 👉 https://4g5gconsultants.com/pusch-ip/

5G NR Physical Layer

- Server-grade Layer 1 on COTS Arm hardware (Neoverse N1/N2)

- Interfaces: O-RAN 7.2x split fronthaul (southbound) and FAPI (northbound)

- Scalable in number of users and component carriers (CCs) using the Core-Thread Scheduler framework

- Low-latency, lightweight task scheduler: the “Core-Thread Scheduler”

- Core utilization on par with popular server-grade solutions on the market:

  - 2 cores per CC (100 MHz, 4T4R, numerology 1) on Neoverse N1 (Armv8 AArch64 with 128-bit NEON SIMD)

  - 1.5 cores per CC on Neoverse N2 (Armv9-A core with SVE2)

- Power efficiency on Arm (Ampere):

  - Only 2 W per core, i.e. 4 W per CC

- Enough cores in a single NUMA node to host all RAN components (L1, L2, L3) and the 5G Core

- Total cost of ownership (TCO):

  - Ampere Altra Arm processor: lower price per core and better power efficiency per core
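For context on the per-CC core counts, the raw resource-element rate of one 100 MHz, numerology 1, four-antenna carrier can be worked out from standard 3GPP numerology (30 kHz subcarrier spacing, 273 PRBs at 100 MHz per TS 38.104, 14 OFDM symbols per 0.5 ms slot with normal cyclic prefix). This is a back-of-the-envelope illustration that assumes every symbol of the slot is active in one direction; it counts resource elements only, not the processing cost per element, and uses the Neoverse N1 figure of 2 cores per CC:

$$
\begin{aligned}
\Delta f &= 15\,\text{kHz}\cdot 2^{\mu} = 30\,\text{kHz}, \qquad T_{\text{slot}} = \frac{1\,\text{ms}}{2^{\mu}} = 0.5\,\text{ms} \quad (\mu = 1) \\
N_{\text{SC}} &= 273 \times 12 = 3276 \\
N_{\text{RE}} &= N_{\text{SC}} \times 14 \times 4 = 183\,456 \ \text{per slot} \\
R_{\text{RE}} &= \frac{183\,456}{0.5\,\text{ms}} \approx 3.67 \times 10^{8}\ \text{RE/s} \\
P_{\text{CC}} &= 2\ \text{cores} \times 2\,\text{W} = 4\,\text{W}
\end{aligned}
$$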

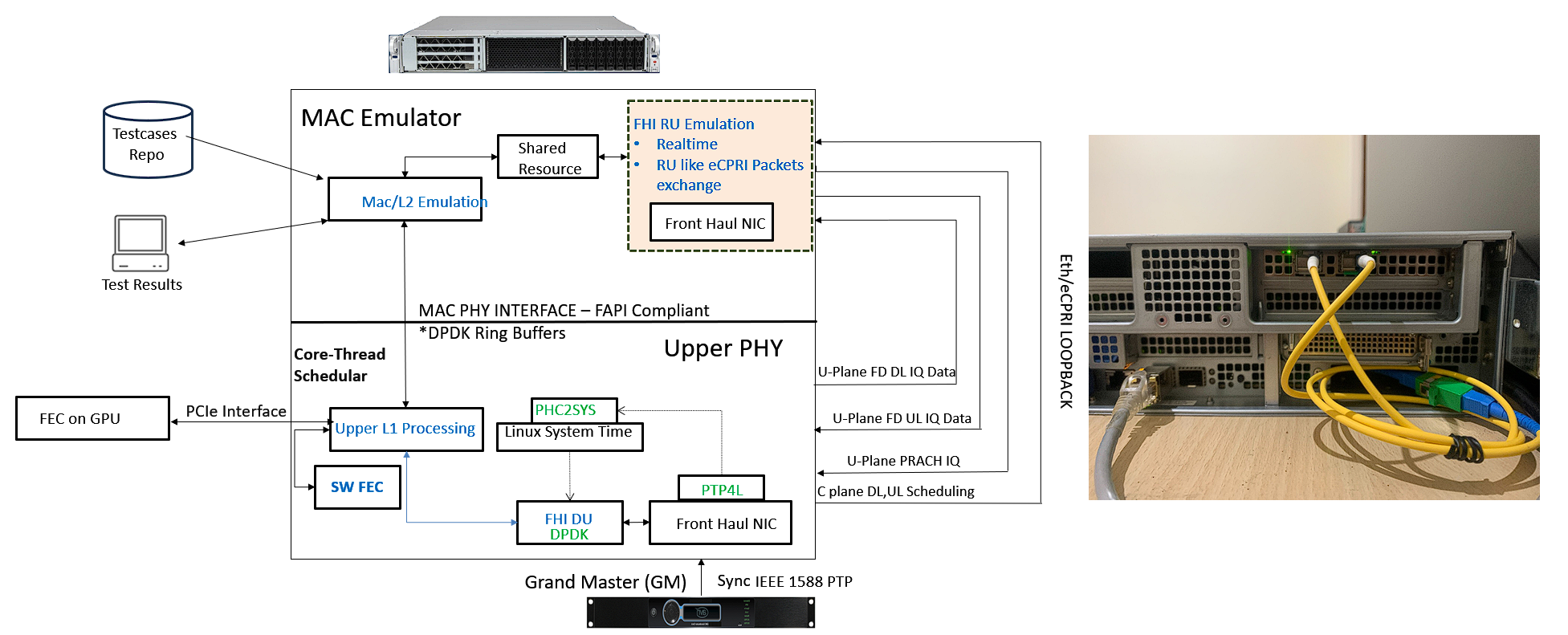

MAC Emulator – High PHY – Real-time Test Framework

4G5G’s MAC Emulator enables real‑time L1/PHY validation with carrier‑grade rigor.

- SFP/eCPRI loopback to an RU emulator for timing checks, UL payload integrity, DL IQ sample integrity, and slot-accurate UL/DL verification across O-RAN 7.2a flows.

- Nightly automated regression executing several hundred curated test vectors, with pass/fail dashboards and artifact retention.

- Upper‑PHY receiver algorithms validated for 3GPP TS 38.104 conformance, including channel estimation, equalization, and measurements.

- Supports FAPI/nFAPI-style interfaces, slot indication timing, and per-CC configuration to stress mixed MCS, MIMO layers, and transport block sizes.

- Deterministic latency profiling with per-stage timestamps (FFT, channel estimation, equalization, LDPC FEC) and thresholds for real-time deadlines.

- CI-integrated: triggers on commits and generates trace logs and KPIs (throughput, BLER, CPU/GPU utilization, and IQ sample correctness measured as EVM).

- Scalable execution: parallel multi‑cell, multi‑carrier runs to validate core‑usage per CC, memory footprint, and PCIe/NIC throughput margins.

- Plug-in vector framework: import vectors from lab or field captures, channel simulators, or MATLAB dumps; export failures with a minimal repro harness for rapid root-cause analysis. Test parameters are configured in JSON and IQ samples are stored as binary files.
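The per-stage deadline check described above could look roughly like the sketch below. It is an illustration under assumptions, not the emulator's code: the stage enum, the `stage_budget_ns` values, and `check_slot_latency()` are hypothetical, and timestamps come from POSIX `clock_gettime(CLOCK_MONOTONIC)` rather than a hardware counter. The 500 µs whole-slot budget follows from numerology 1.

```c
#define _POSIX_C_SOURCE 199309L
#include <stdint.h>
#include <stdio.h>
#include <time.h>

/* Hypothetical PHY stages, in pipeline order. */
enum stage { ST_FFT, ST_CHEST, ST_EQ, ST_LDPC, ST_COUNT };

static const char *const stage_name[ST_COUNT] = { "FFT", "ChEst", "Eq", "LDPC" };

/* Hypothetical per-stage budgets in ns; numerology 1 gives a 500 us slot. */
static const uint64_t stage_budget_ns[ST_COUNT] = { 50000, 80000, 60000, 250000 };
#define SLOT_BUDGET_NS 500000ULL

/* Monotonic timestamp in nanoseconds. */
uint64_t now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000ULL + (uint64_t)ts.tv_nsec;
}

/* t[0] is the slot start; t[i + 1] is the end of stage i.
 * Returns a bitmask: bit i set if stage i exceeded its budget,
 * bit ST_COUNT set if the whole chain missed the slot deadline. */
unsigned check_slot_latency(const uint64_t t[ST_COUNT + 1])
{
    unsigned violations = 0;
    for (int i = 0; i < ST_COUNT; i++) {
        uint64_t dur = t[i + 1] - t[i];
        if (dur > stage_budget_ns[i]) {
            violations |= 1u << i;
            fprintf(stderr, "stage %s took %llu ns (budget %llu ns)\n", stage_name[i],
                    (unsigned long long)dur, (unsigned long long)stage_budget_ns[i]);
        }
    }
    if (t[ST_COUNT] - t[0] > SLOT_BUDGET_NS)
        violations |= 1u << ST_COUNT;
    return violations;
}
```

Returning a bitmask rather than a single pass/fail lets a dashboard report which stage overran, while the whole-slot bit flags a real-time deadline miss even when no single stage exceeded its own budget.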
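IQ sample correctness is commonly quantified as RMS error vector magnitude against a reference vector, EVM = 100 · sqrt(Σ|meas − ref|² / Σ|ref|²) percent. A minimal sketch, assuming interleaved float I/Q buffers of equal length; `evm_sq()`, `evm_within_limit()` and the limit value are hypothetical, not the framework's API:

```c
#include <stdbool.h>
#include <stddef.h>

/* Squared RMS EVM as a fraction: sum|meas - ref|^2 / sum|ref|^2.
 * Buffers hold n complex samples as interleaved I,Q floats.
 * Returns -1.0 if the reference has zero energy (EVM undefined). */
double evm_sq(const float *ref, const float *meas, size_t n)
{
    double err = 0.0, pwr = 0.0;
    for (size_t k = 0; k < 2 * n; k += 2) {
        double ei = (double)meas[k] - ref[k];
        double eq = (double)meas[k + 1] - ref[k + 1];
        err += ei * ei + eq * eq;
        pwr += (double)ref[k] * ref[k] + (double)ref[k + 1] * ref[k + 1];
    }
    return pwr > 0.0 ? err / pwr : -1.0;
}

/* True if RMS EVM <= limit_pct, i.e. 100 * sqrt(evm_sq) <= limit_pct.
 * The comparison is done on squares, so no sqrt (and no libm) is needed. */
bool evm_within_limit(const float *ref, const float *meas, size_t n, double limit_pct)
{
    double e2 = evm_sq(ref, meas, n);
    return e2 >= 0.0 && 1e4 * e2 <= limit_pct * limit_pct;
}
```

The reported KPI can then be computed offline as 100 · sqrt(`evm_sq()`), while the pass/fail check stays cheap enough to run on every captured slot.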

4G5G’s Core-Thread Scheduler

4G5G’s Core-Thread Scheduler is deterministic, lock-free, run-to-completion, and priority- and deadline-aware, delivering predictable latency and clean per-core utilization for real-time telecom workloads.

Core‑Thread Scheduler is a lightweight API framework for scheduling periodic tasks by priority and execution deadlines across a defined CPU‑core set.

- Each core runs a distributed worker that acts as both producer and consumer, dispatching work to itself or peer cores for balanced utilization.

- Priority‑ordered queues serve higher‑priority items first; among equals, older pending tasks take precedence to minimize starvation.

- Lock‑free atomic queues provide ultra‑light enqueue/dequeue paths, keeping overhead low under high concurrency.

- Tasks are run‑to‑completion with no preemption or context save/restore, yielding predictable latency and a cache‑friendly execution model.

- Built‑in telemetry captures task IDs, execution latency, and per‑core utilization for observability and post‑mortem analysis.

- APIs support task splitting, priorities, deadlines, and CPU‑set affinity for deterministic placement in real‑time pipelines.

- Cores can be added or removed on the fly, enabling elastic scaling without service interruption.

- Adaptive work partitioning can split tasks dynamically by symbol, half-slot, Tx/Rx direction, or user to match the workload.

- Early‑exit conditions allow prompt termination of task chains when goals are met or guards trigger.

- Tight CUDA integration triggers GPU kernels and collects results asynchronously, avoiding CPU stalls while kernels execute.
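A simplified sketch of priority-ordered, lock-free queuing follows. It assumes one single-producer/single-consumer ring per priority level; a dispatcher where peer cores enqueue to each other would need one ring per producer core or a multi-producer queue. `task_t`, `pq_enqueue()`, `pq_dequeue()` and the sizes are hypothetical. Scanning levels from highest to lowest gives priority order, and FIFO order inside each ring means the oldest task wins among equals:

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_PRIO  4     /* hypothetical: 0 = highest priority */
#define RING_SIZE 256   /* must be a power of two */

typedef struct {
    void (*fn)(void *arg);  /* run-to-completion task body */
    void *arg;
} task_t;

/* SPSC ring: tail is written only by the producer, head only by the
 * consumer, so acquire/release loads and stores suffice (no locks, no CAS). */
typedef struct {
    _Atomic size_t head;
    _Atomic size_t tail;
    task_t slots[RING_SIZE];
} spsc_ring_t;

typedef struct {
    spsc_ring_t ring[NUM_PRIO];
} prio_queue_t;

static bool ring_push(spsc_ring_t *r, task_t t)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_SIZE)
        return false;                               /* full */
    r->slots[tail & (RING_SIZE - 1)] = t;
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}

static bool ring_pop(spsc_ring_t *r, task_t *out)
{
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail)
        return false;                               /* empty */
    *out = r->slots[head & (RING_SIZE - 1)];
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

bool pq_enqueue(prio_queue_t *q, unsigned prio, task_t t)
{
    return prio < NUM_PRIO && ring_push(&q->ring[prio], t);
}

/* Highest priority first; within a level, the oldest task comes out first. */
bool pq_dequeue(prio_queue_t *q, task_t *out)
{
    for (unsigned p = 0; p < NUM_PRIO; p++)
        if (ring_pop(&q->ring[p], out))
            return true;
    return false;
}
```

A worker loop would repeatedly call `pq_dequeue()` and run `t.fn(t.arg)` to completion before taking the next task, so no context save/restore is needed.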

Core-Thread Scheduler – Task Flow Example

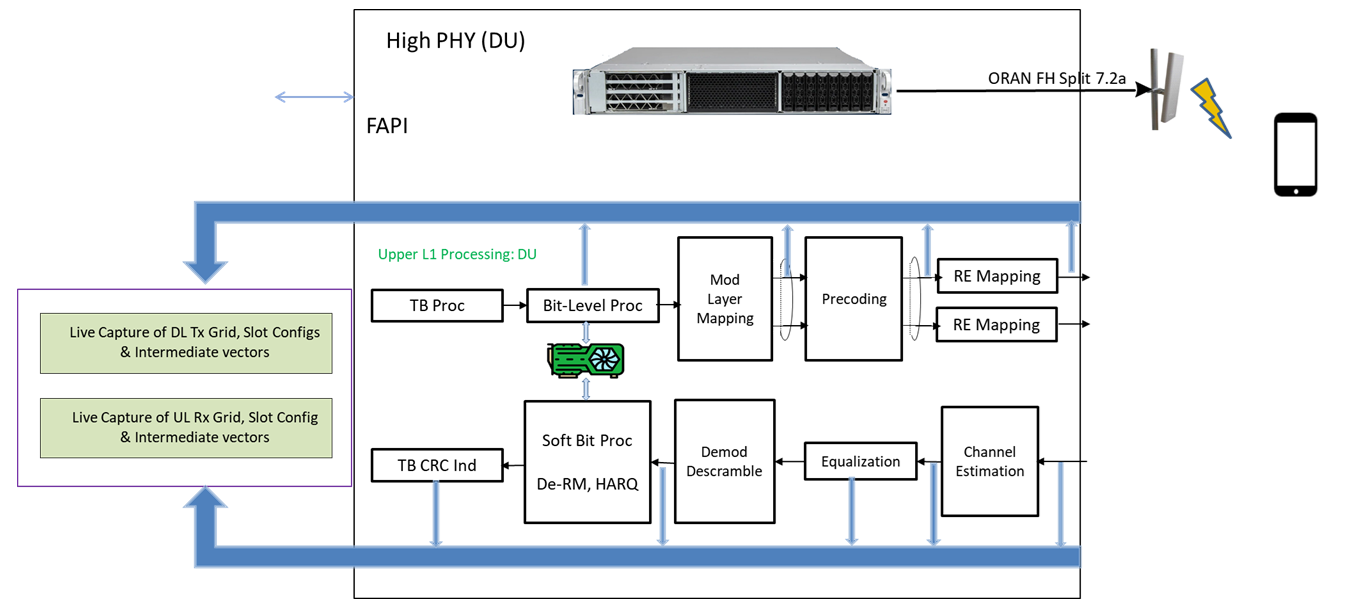
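A run-to-completion task chain with early exit can be sketched as below. The stage functions, `ul_ctx_t`, `run_chain()` and the status codes are hypothetical; the example models a UL chain where decoding stops the chain as soon as the CRC passes, and a guard aborts the chain when no signal is detected:

```c
#include <stddef.h>

typedef enum { TASK_CONTINUE, TASK_DONE, TASK_ABORT } task_status_t;
typedef task_status_t (*stage_fn)(void *ctx);

/* Runs stages in order, each to completion. Stops early when a stage reports
 * its goal met (TASK_DONE) or a guard fired (TASK_ABORT).
 * Returns the number of stages executed; *final_st gets the last status. */
size_t run_chain(const stage_fn *stages, size_t n, void *ctx, task_status_t *final_st)
{
    task_status_t st = TASK_CONTINUE;
    size_t i = 0;
    while (i < n && st == TASK_CONTINUE)
        st = stages[i++](ctx);
    if (final_st)
        *final_st = st;
    return i;
}

/* Hypothetical uplink slot context. */
typedef struct {
    int stages_run;
    int crc_ok;     /* would be set by the decoder; preset here for illustration */
    int no_signal;  /* guard condition, e.g. DMRS energy below a threshold */
} ul_ctx_t;

static task_status_t st_fft(void *c)
{
    ((ul_ctx_t *)c)->stages_run++;
    return TASK_CONTINUE;
}

static task_status_t st_chest(void *c)
{
    ul_ctx_t *x = c;
    x->stages_run++;
    return x->no_signal ? TASK_ABORT : TASK_CONTINUE;   /* guard triggers */
}

static task_status_t st_decode(void *c)
{
    ul_ctx_t *x = c;
    x->stages_run++;
    return x->crc_ok ? TASK_DONE : TASK_CONTINUE;       /* goal met: stop */
}

/* Hypothetical fallback stage, reached only when the CRC has not passed. */
static task_status_t st_extra_iters(void *c)
{
    ((ul_ctx_t *)c)->stages_run++;
    return TASK_CONTINUE;
}

static const stage_fn ul_chain[] = { st_fft, st_chest, st_decode, st_extra_iters };
```

A final status of `TASK_CONTINUE` means the chain ran every stage without reaching its goal, which the caller can treat differently from an explicit abort.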

AI‑ready PHY data capture for training‑grade, real‑time telemetry

With AI-RAN on the horizon, high‑fidelity, real‑time data pipelines are essential to capture configurations and signals across PHY stages in lab testbeds and live networks, enabling trustworthy training datasets for AI models.

Neural network–based channel estimation, for example, benefits from labelled data spanning 3GPP channel models with diverse delay spreads, Doppler, SNR ranges, PUSCH allocations, layers, and bandwidths to ensure robust generalization.

The PHY framework is designed for continuous, in-band telemetry without perturbing real-time schedules: it captures DL/UL configuration parameters, IQ samples at multiple processing stages, transport blocks, and CRC outcomes while the receiver/transmitter runs at full throughput.

Low per-message overhead, lock-free queues, and non-blocking I/O ensure no backpressure and no interference with normal PHY processing. Configurable triggers and JSON-driven profiles let operators choose capture points (e.g., post-FFT frequency-domain full grid or DMRS only, post-channel-estimation, post-equalization, pre/post-FEC, CRC results, transport blocks) and produce synchronized artifacts suitable for supervised learning, validation, and site-specific fine-tuning of AI-native receivers, all without stalling real-time threads.
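A non-blocking capture path of this kind can be sketched with a bitmask of enabled capture points and a single-producer/single-consumer ring that drops and counts records when full, so the PHY thread never waits on the writer. Everything here (`capture_t`, the `CAP_*` points, the record size) is a hypothetical illustration; the bitmask would presumably be set from the JSON profile, and a separate thread would drain records to disk:

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical capture points, enabled as a bitmask. */
enum {
    CAP_POST_FFT   = 1u << 0,
    CAP_DMRS_ONLY  = 1u << 1,
    CAP_POST_CHEST = 1u << 2,
    CAP_POST_EQ    = 1u << 3,
    CAP_CRC        = 1u << 4,
};

#define CAP_SLOTS     64    /* power of two */
#define CAP_MAX_BYTES 256   /* hypothetical max payload per record */

typedef struct {
    uint32_t point;   /* which capture point produced this record */
    uint32_t slot;    /* slot index, for time alignment across points */
    uint32_t len;
    uint8_t  data[CAP_MAX_BYTES];
} cap_rec_t;

typedef struct {
    _Atomic uint32_t enabled;   /* bitmask, can change at runtime */
    _Atomic size_t head, tail;  /* PHY thread produces, writer thread consumes */
    _Atomic uint64_t dropped;   /* records discarded instead of blocking */
    cap_rec_t rec[CAP_SLOTS];
} capture_t;

/* Real-time side. Never blocks: a disabled point costs one atomic load,
 * and a full ring (or oversized record) is counted as a drop. */
bool capture_try(capture_t *c, uint32_t point, uint32_t slot, const void *buf, uint32_t len)
{
    if (!(atomic_load_explicit(&c->enabled, memory_order_relaxed) & point))
        return false;
    size_t tail = atomic_load_explicit(&c->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&c->head, memory_order_acquire);
    if (tail - head == CAP_SLOTS || len > CAP_MAX_BYTES) {
        atomic_fetch_add_explicit(&c->dropped, 1, memory_order_relaxed);
        return false;
    }
    cap_rec_t *r = &c->rec[tail & (CAP_SLOTS - 1)];
    r->point = point;
    r->slot = slot;
    r->len = len;
    memcpy(r->data, buf, len);
    atomic_store_explicit(&c->tail, tail + 1, memory_order_release);
    return true;
}

/* Non-real-time writer side: takes the oldest record, if any. */
bool capture_drain_one(capture_t *c, cap_rec_t *out)
{
    size_t head = atomic_load_explicit(&c->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&c->tail, memory_order_acquire);
    if (head == tail)
        return false;
    *out = c->rec[head & (CAP_SLOTS - 1)];
    atomic_store_explicit(&c->head, head + 1, memory_order_release);
    return true;
}
```

Counting drops instead of blocking is what guarantees no backpressure; the `dropped` counter tells the operator whether a capture profile is too heavy for the configured ring size.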

With integrated GPU acceleration, the framework is ready for AI‑RAN, supplying the compute needed for low‑latency model inference alongside baseband workloads on shared infrastructure.

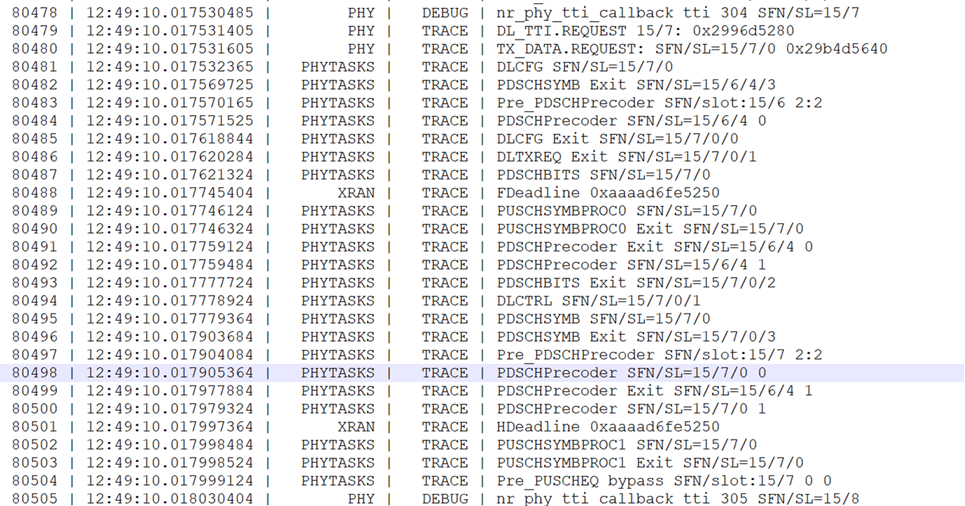

Real-time Fast Logger – Text Format Logs

4G5G’s real-time fast logger is engineered for PHY engineers and system integrators to make high-throughput, multi-core systems observable without sacrificing determinism. It delivers readable, structured records with precise timestamps, task IDs, core affinity, and stage markers that map directly to L1/PHY pipelines, while keeping overhead minimal.

- Six severity levels (FATAL, ERROR, WARNING, INFO, DEBUG, TRACE), adjustable at runtime to tune verbosity without redeploying.

- Simple integration via a header include or a minimal API hook; suitable for tracing third-party libraries such as xRAN and ArmRAL.

- printf‑style formatting with integer arguments (char, short, int, 64‑bit int, pointers) and constant C‑strings.

- Built‑in rotation: split output into multiple files; max file size is configurable at init.

- Timestamped entries with date and time; precision bounded by the CPU’s Time Stamp Counter.
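One common way to keep a logging hot path cheap, consistent with the integer-and-constant-string restriction above, is to store the format pointer and raw integer arguments and defer text formatting to a background thread. The sketch below assumes that design; it is not 4G5G's implementation. `log_rec_t`, `log_capture()` and `log_format()` are hypothetical, and every conversion in the format must be `%llu` or `%llx` so that the deferred `snprintf` call sees matching argument types:

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

/* The six levels listed above; a lower value is more severe. */
typedef enum { LOG_FATAL, LOG_ERROR, LOG_WARNING, LOG_INFO, LOG_DEBUG, LOG_TRACE } log_level_t;

static _Atomic int g_log_level = LOG_INFO;   /* adjustable at runtime */

static inline void log_set_level(log_level_t l)
{
    atomic_store_explicit(&g_log_level, (int)l, memory_order_relaxed);
}

static inline bool log_enabled(log_level_t l)
{
    return (int)l <= atomic_load_explicit(&g_log_level, memory_order_relaxed);
}

#define LOG_MAX_ARGS 4

/* Hot path stores only a pointer to a constant format string and raw
 * integer arguments; text is produced later by a background formatter. */
typedef struct {
    uint64_t    ts;      /* raw counter value, converted to date/time offline */
    log_level_t level;
    const char *fmt;     /* must point to a string literal (static lifetime) */
    unsigned long long args[LOG_MAX_ARGS];
} log_rec_t;

/* Fills *r only if lvl passes the runtime filter. */
bool log_capture(log_rec_t *r, log_level_t lvl, uint64_t ts, const char *fmt,
                 unsigned long long a0, unsigned long long a1,
                 unsigned long long a2, unsigned long long a3)
{
    if (!log_enabled(lvl))
        return false;
    *r = (log_rec_t){ ts, lvl, fmt, { a0, a1, a2, a3 } };
    return true;
}

/* Background side: formats a record; unused trailing args are ignored. */
int log_format(const log_rec_t *r, char *out, size_t n)
{
    return snprintf(out, n, r->fmt, r->args[0], r->args[1], r->args[2], r->args[3]);
}
```

Because `fmt` must be a string literal, only its pointer is copied, and a disabled level costs a single relaxed atomic load.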

Real-time O-RAN Fronthaul Packet Capture

- Real-time O-RAN fronthaul packet capture: captures eCPRI/O-RAN C/U/S-plane traffic inline without perturbing PHY deadlines, preserving slot/symbol timing while the DU continues high-throughput operation.

- Operator-ready artifacts: outputs PCAP files; capture is enabled or disabled separately for DL and UL via a simple JSON config. This avoids reliance on expensive, specialized capture hardware.

- Why this matters: O-RAN split 7.2x moves significant low-PHY processing into the RU (FFT/IFFT, PRACH, digital beamforming, and IQ compression/decompression, carried over jumbo frames), making DU↔RU interoperability and timing windows sensitive to even small instrumentation overheads.

- Jumbo frame awareness: the capture path supports MTUs up to typical O-RAN jumbo sizes, so compressed IQ payloads and sectionized PRBs are recorded intact for later reconstruction and analysis.

- Header‑accurate decoding: records the Radio Application Header, Section Application Headers, and optional Compression Header, retaining frame/subframe/slot/symbol identifiers and PRB mapping needed for precise time correlation to RF events.

- Compression insight: an offline tool decompresses block floating point (BFP) IQ payloads to recover the samples and detect anomalies across U-plane flows.

- Wireshark workflow: an offline parser leverages the eCPRI and O-RAN fronthaul dissectors to reconstruct message types, section layouts, and IQ payloads, enabling filters like ecpri.type==0x00 and CUS-plane sub-dissection for focused triage.

- Timing correlation: captures preserve frameId, subframeId, slotId, and symbolId so logs can be aligned with DU transmit/receive windows for delay-budget verification per 7.2x guidance.

- RU complexity called out: moving precoding/beam ID signaling and FFT/IFFT into the RU reduces fronthaul bandwidth but raises the testing burden, necessitating packet-accurate capture for multi-vendor interoperability.
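The frame/subframe/slot/symbol fields used for timing correlation can be extracted from a captured packet as sketched below, assuming the Ethernet header and any VLAN tag have already been stripped so that `p` points at the eCPRI common header (EtherType 0xAEFE). Field widths follow the eCPRI common header and the O-RAN radio application common header; `fh_hdr_t` and `parse_fh_header()` are hypothetical names:

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t  ecpri_revision;
    uint8_t  msg_type;        /* 0x00 = IQ data (U-plane), 0x02 = real-time control (C-plane) */
    uint16_t payload_size;
    uint16_t pc_id;           /* ecpriPcid (U-plane) or ecpriRtcid (C-plane) */
    uint8_t  seq_id;
    uint8_t  data_direction;  /* 1 = Tx (DL), 0 = Rx (UL) */
    uint8_t  payload_version;
    uint8_t  filter_index;
    uint8_t  frame_id;        /* 8 bits */
    uint8_t  subframe_id;     /* 4 bits */
    uint8_t  slot_id;         /* 6 bits */
    uint8_t  symbol_id;       /* 6 bits (startSymbolId in C-plane) */
} fh_hdr_t;

/* Parses the 8-byte eCPRI header plus the 4-byte radio application
 * common header. Multi-byte fields are big-endian. */
bool parse_fh_header(const uint8_t *p, size_t len, fh_hdr_t *h)
{
    if (len < 12)
        return false;
    h->ecpri_revision  = p[0] >> 4;
    h->msg_type        = p[1];
    h->payload_size    = (uint16_t)((p[2] << 8) | p[3]);
    h->pc_id           = (uint16_t)((p[4] << 8) | p[5]);
    h->seq_id          = p[6];
    const uint8_t *ra  = p + 8;   /* radio application common header */
    h->data_direction  = ra[0] >> 7;
    h->payload_version = (ra[0] >> 4) & 0x7;
    h->filter_index    = ra[0] & 0xF;
    h->frame_id        = ra[1];
    h->subframe_id     = ra[2] >> 4;
    h->slot_id         = (uint8_t)(((ra[2] & 0xF) << 2) | (ra[3] >> 6));
    h->symbol_id       = ra[3] & 0x3F;
    return true;
}
```

The slot ID straddles a byte boundary (4 bits in one byte, 2 in the next), which is a frequent source of off-by-slot errors when correlating captures with DU logs.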
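Offline BFP decompression of one PRB can be sketched as follows, assuming the udCompParam byte carries the exponent in its low 4 bits and is followed by 24 two's-complement mantissas (I and Q for 12 REs) packed MSB-first at `iq_width` bits each. `bfp_decompress_prb()` is a hypothetical helper, and the code assumes `iq_width + exponent <= 16` so each result fits in 16 bits:

```c
#include <stddef.h>
#include <stdint.h>

#define RE_PER_PRB 12

/* Reads `width` bits MSB-first starting at bit offset *pos, advancing *pos. */
static uint32_t read_bits(const uint8_t *buf, size_t *pos, unsigned width)
{
    uint32_t v = 0;
    for (unsigned i = 0; i < width; i++, (*pos)++)
        v = (v << 1) | ((buf[*pos >> 3] >> (7 - (*pos & 7))) & 1u);
    return v;
}

/* in:  udCompParam byte, then 24 mantissas of iq_width bits (I0,Q0,I1,Q1,...).
 * out: 24 int16 values (interleaved I/Q) = mantissa * 2^exponent.
 * Returns the number of input bytes consumed for this PRB. */
size_t bfp_decompress_prb(const uint8_t *in, unsigned iq_width, int16_t out[2 * RE_PER_PRB])
{
    unsigned exponent = in[0] & 0x0F;
    const uint8_t *m = in + 1;
    size_t pos = 0;
    for (int k = 0; k < 2 * RE_PER_PRB; k++) {
        uint32_t raw = read_bits(m, &pos, iq_width);
        int32_t v = (int32_t)raw;
        if (raw & (1u << (iq_width - 1)))       /* sign-extend two's complement */
            v -= (int32_t)(1u << iq_width);
        out[k] = (int16_t)(v * (1 << exponent)); /* multiply: no shift of negatives */
    }
    return 1 + (2 * RE_PER_PRB * iq_width + 7) / 8;
}
```

Advancing the input pointer by the returned byte count walks through the PRBs of a section, and the recovered samples can then be fed to an EVM or anomaly check.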